Llamaindex vs Stack AI

Kevin Bartley

@KevinBartley

When browsing for new tools for your tech stack, it’s easy to get lost. Marketing pages make big promises about the end result, such as building AI agents from top to bottom, so it can be hard to tell how close each platform will actually get you.

Llamaindex can get you started, but won’t take you far. It lets you index your documents and files, getting them ready to pass to an LLM when you need grounded answers. While it is a component in building powerful AI agents and chatbots, it is only that: a component that needs to be combined with many other tools before you have anything ready to share with your team.

If you’re looking for an end-to-end platform, Stack AI could be a better option: it includes all the features of Llamaindex combined with a visual AI workflow and agent builder. You can build and share your tools with your entire team in a no-code interface, with all the features to scale and upgrade them over time.

Why Stack AI is the best alternative to Llamaindex

| | Llamaindex | Stack AI |
|---|---|---|
| Visual builder | ❌ | ✅ |
| Advanced RAG system | ✅ (hard to set up) | ✅ (easy setup) |
| Pre-built interfaces | ❌ | ✅ |
| Minimal setup required | ❌ | ✅ |
| Variety of AI models | ❌ | ✅ |
| Connection with knowledge bases | ✅ | ✅ |
| Performance monitoring | ✅ | ✅ |
| Guardrails and PII protection | ❌ | ✅ |
| HIPAA compliance | ✅ | ✅ |
| Access to enterprise support engineers and AI engineers | ❌ | ✅ |

Platform approach and capabilities

Llamaindex is a developer tool for data preparation

A view of the LlamaCloud dashboard, where you can access the Llamaindex feature set.

Llamaindex focuses on preparing data for large language models (LLMs), streamlining the processes of data ingestion, indexing, and querying. This provides a seamless integration between datasets and LLM-powered applications.
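To make the three stages concrete, here is a minimal, library-free sketch of ingestion, indexing, and querying. It is not Llamaindex’s API: it swaps in a toy inverted index with term-overlap scoring where Llamaindex would use embedding models and a vector store, and every name in it (`ingest`, `build_index`, `query`) is hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and keep only alphanumeric runs as terms.
    return re.findall(r"[a-z0-9]+", text.lower())


def ingest(raw_docs: dict[str, str]) -> dict[str, str]:
    # Ingestion: normalize whitespace in each document, keyed by an ID.
    return {doc_id: " ".join(text.split()) for doc_id, text in raw_docs.items()}


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    # Indexing: map each term to the set of document IDs that contain it.
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return dict(index)


def query(index: dict[str, set[str]], question: str, top_k: int = 2) -> list[str]:
    # Querying: score documents by how many distinct query terms they contain.
    scores: dict[str, int] = defaultdict(int)
    for term in set(tokenize(question)):
        for doc_id in index.get(term, set()):
            scores[doc_id] += 1
    # Highest score first; ties broken by document ID for a stable order.
    ranked = sorted(scores, key=lambda d: (-scores[d], d))
    return ranked[:top_k]


docs = ingest({
    "refunds": "Refunds are issued within 14 days of purchase.",
    "shipping": "Orders ship within 2 business days.",
    "warranty": "The warranty covers defects for 12 months.",
})
index = build_index(docs)
print(query(index, "How many days until refunds are issued?"))  # ['refunds', 'shipping']
```

A production indexer would rank by semantic similarity rather than shared words, but the division of labor is the same: ingest once, index once, then query many times.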

Beyond these indexing features, the LlamaCloud platform also offers LlamaParse to parse PDFs into structured data, and LlamaExtract for extracting structured data from unstructured documents.

It doesn’t offer anything beyond this feature set: you can’t build internal tools and share them with your team.

Stack AI is an end-to-end generative AI builder

Stack AI is an end-to-end platform where you connect data sources to LLMs to build internal tools and agents that process that data. It offers all the capabilities that Llamaindex does to support this use case, with proprietary algorithms for searching and retrieving information from popular integrations such as Microsoft SharePoint or Dropbox, among many others.

Access all the leading AI providers in your projects, from OpenAI to Anthropic, without any integration work or extra costs. This adds versatility to every tool you build, as you can choose the best LLM for each task. As new models are released, you can switch to them by changing the model selector dropdown in your project.

After connecting data sources, LLMs, and advanced tools, you can export your project as an interface such as a form, a chatbot, or a batch-processing interface. Each interface is deployed to a live link that you can share with your team, with security features to prevent unauthorized use.

You can monitor each tool on the analytics and manager pages, which break down total runs, users, and other performance statistics. You can also generate AI-powered reports on how your tool is used, giving you a basis for improving it over time.

Retrieval-augmented generation (RAG) capabilities

Llamaindex only takes care of preparing data

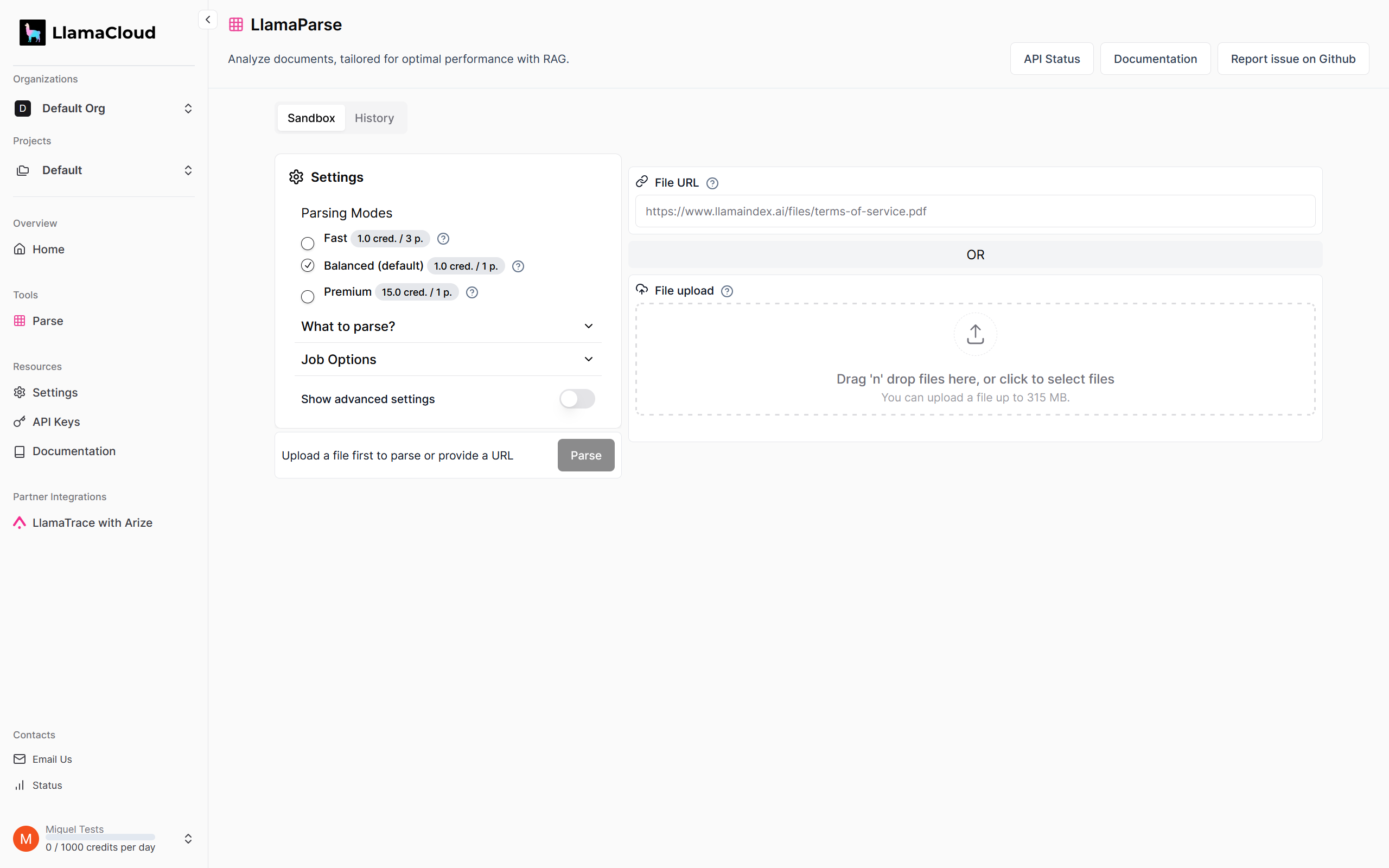

The LlamaParse interface, where you can experiment with ways to process your documents.

RAG is composed of multiple parts, of which Llamaindex handles only data preparation, indexing, and querying. It doesn’t natively handle the user interface, running the LLM, or relaying the answer back to the user.
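To show where the remaining pieces fit, here is a minimal end-to-end RAG loop in plain Python. `retrieve` stands in for the indexing and querying stage; `build_prompt`, the LLM call, and returning the answer are the parts that sit outside it. `fake_llm` is a hypothetical placeholder for a real model call, and the word-overlap retriever is a simplification, not how Llamaindex ranks results.

```python
import re
from typing import Callable


def words(text: str) -> set[str]:
    # Lowercased alphanumeric words, ignoring punctuation.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks: list[str], question: str, top_k: int = 1) -> list[str]:
    # Retrieval (the part an indexing library covers), simplified to word overlap.
    q = words(question)
    # sorted() is stable, so chunks with equal scores keep their original order.
    return sorted(chunks, key=lambda c: -len(q & words(c)))[:top_k]


def build_prompt(question: str, context: list[str]) -> str:
    # Prompt assembly: ground the model in the retrieved context.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


def answer(question: str, chunks: list[str], llm: Callable[[str], str]) -> str:
    # End to end: retrieve, build the prompt, call the LLM, return the reply.
    context = retrieve(chunks, question)
    return llm(build_prompt(question, context))


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call: echoes the first context line.
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return "I don't know."


chunks = [
    "Support is available Monday to Friday.",
    "The Pro plan includes 5 seats.",
]
print(answer("How many seats does the Pro plan include?", chunks, fake_llm))
```

In a real application, `fake_llm` would be replaced by a call to a model provider, and a user interface would sit in front of `answer`.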

Stack AI offers end-to-end RAG implementation

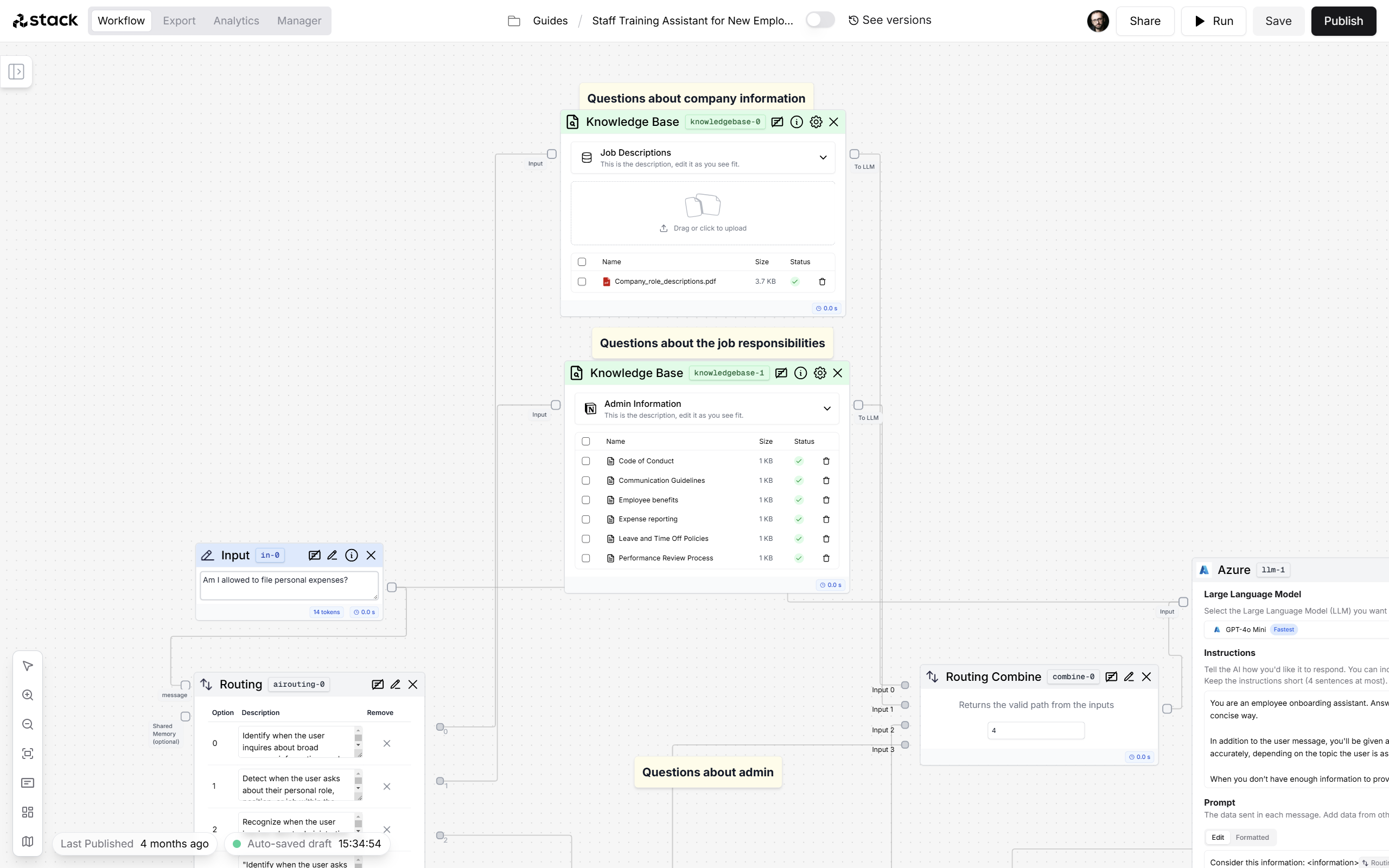

Two knowledge base nodes in Stack AI, supporting PDF documents and Notion pages.

Stack AI offers RAG in an easy-to-setup format. Once you connect your data sources to LLMs inside a project, the platform uses proprietary algorithms to index the data, so it’s easily searchable when you’re using the tool. The algorithms are optimized for finding the most relevant data as quickly as possible, so you can get highly accurate answers, fast.

Ease of use

Llamaindex requires technical skills to use

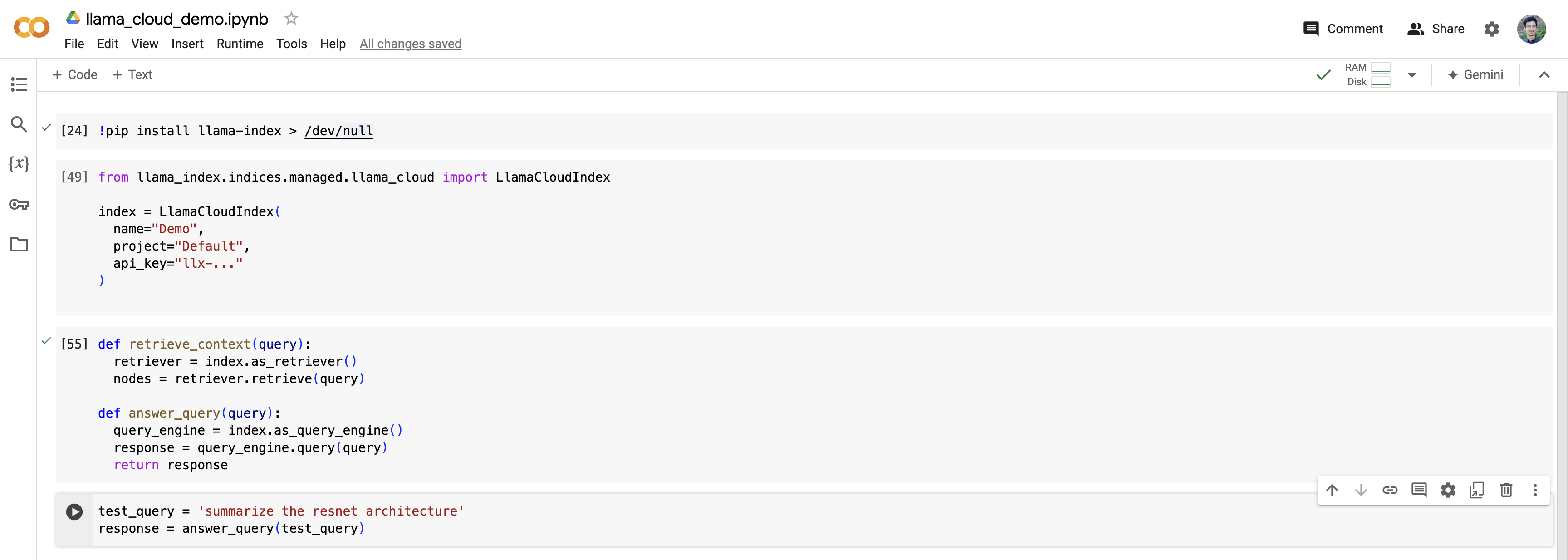

A how-to-use screenshot from the Llamaindex documentation, highlighting how it requires technical skills to deploy.

Llamaindex requires a wide technical skillset. Your team needs to understand data integrations well enough to connect your sources to the platform, know how to structure data so it can be indexed and retrieved efficiently, and know how to set up and maintain vector databases.
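One concrete part of structuring data for indexing is splitting long documents into overlapping chunks before they are embedded. The function below is a minimal sketch of word-based chunking with overlap; `chunk_words` and its default sizes are hypothetical choices for illustration, and Llamaindex’s own document parsers use their own splitting rules and defaults.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `chunk_size` words. Each chunk repeats the
    last `overlap` words of the previous one, so context cut at a chunk
    boundary is partly carried into the next chunk."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Chunk size and overlap are trade-offs: smaller chunks make retrieval more precise but give the LLM less surrounding context per match.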

Stack AI can be used by non-technical people

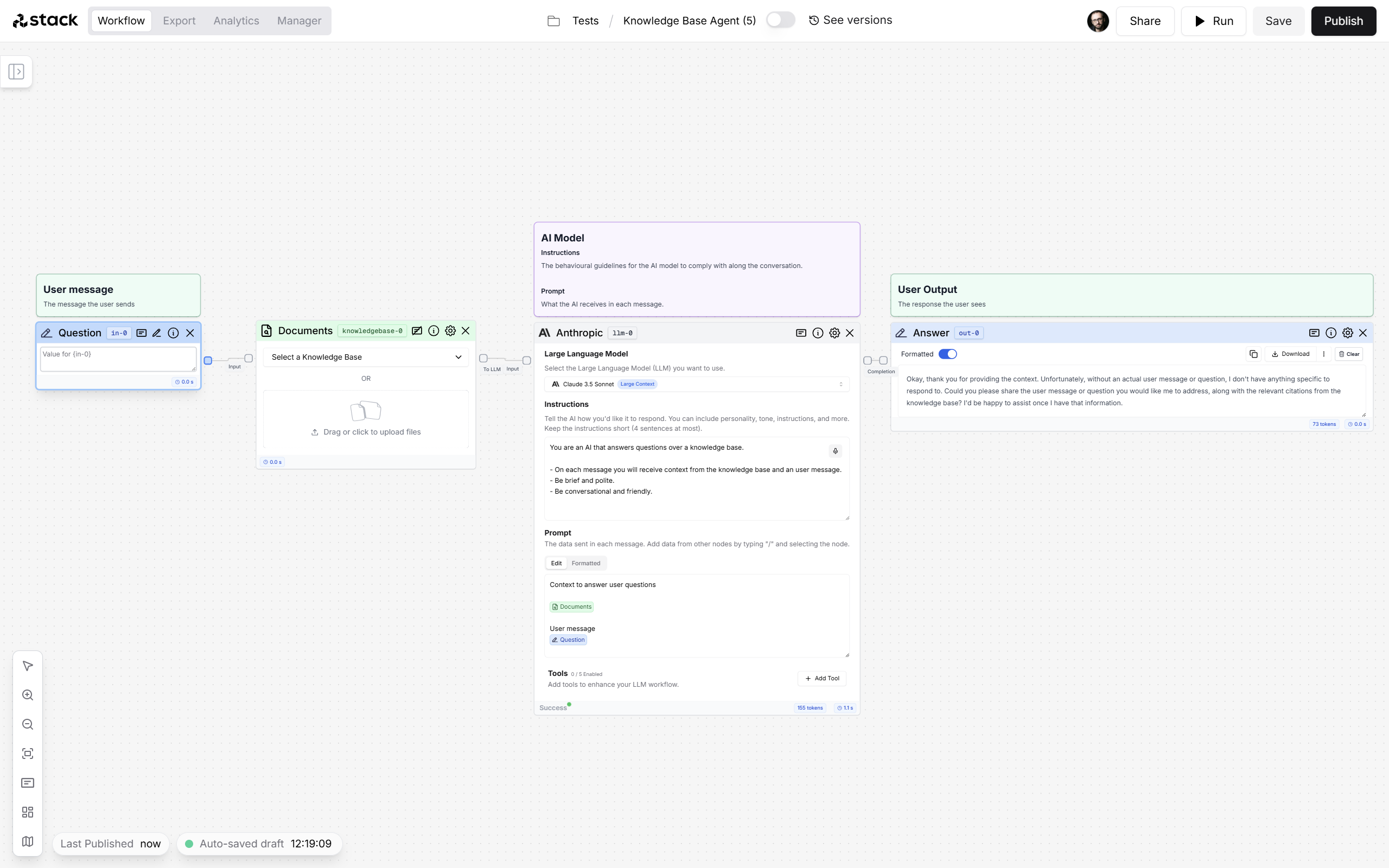

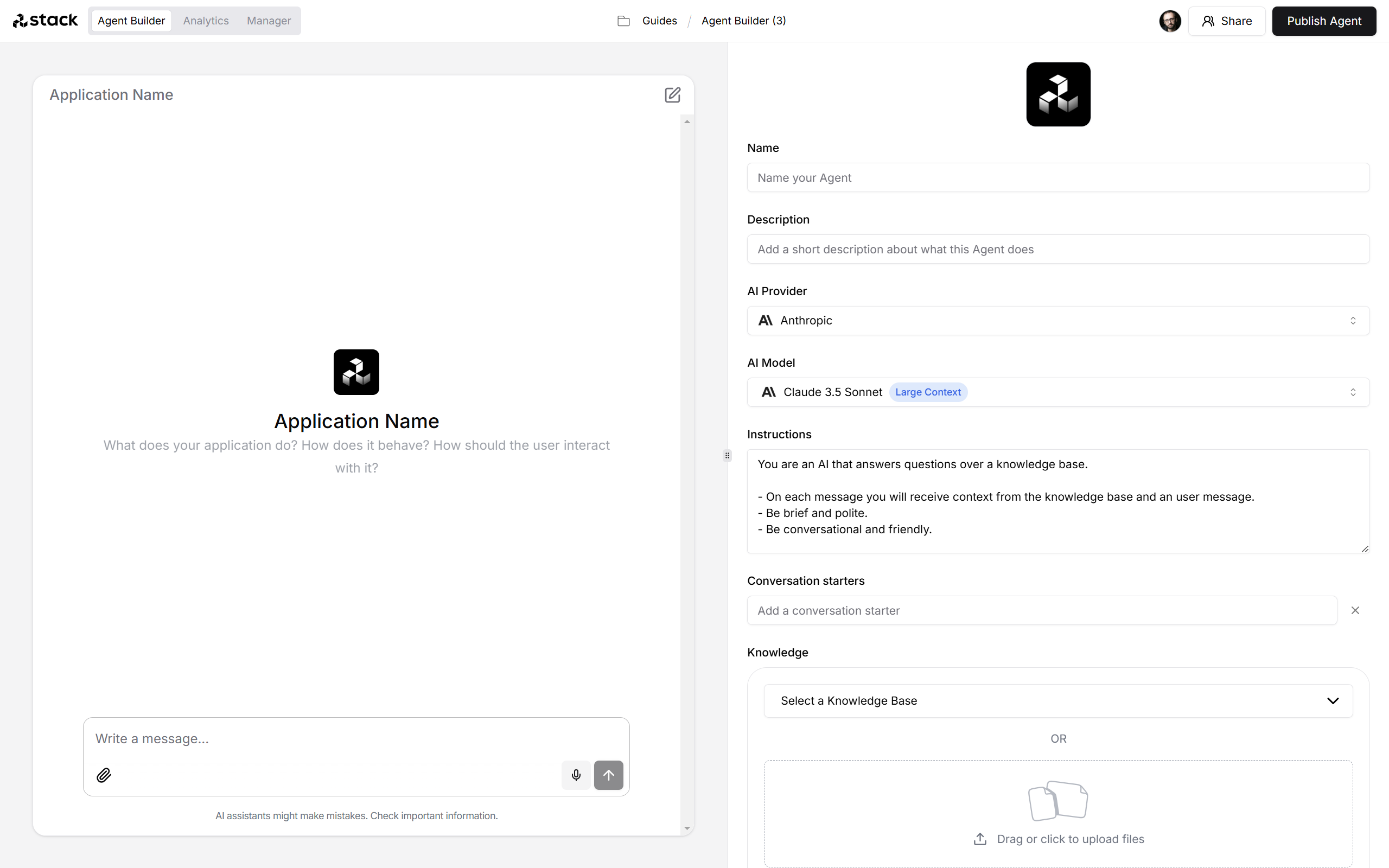

The Stack AI Agent builder, a streamlined no-code experience to build AI agents.

Stack AI is a fully no-code solution. It’s designed to empower non-technical users to build their first AI-powered applications. For developers, it offers a faster, more intuitive experience, while still providing customization via Python code nodes.

User interface

Llamaindex only supports uploading, indexing, and a playground

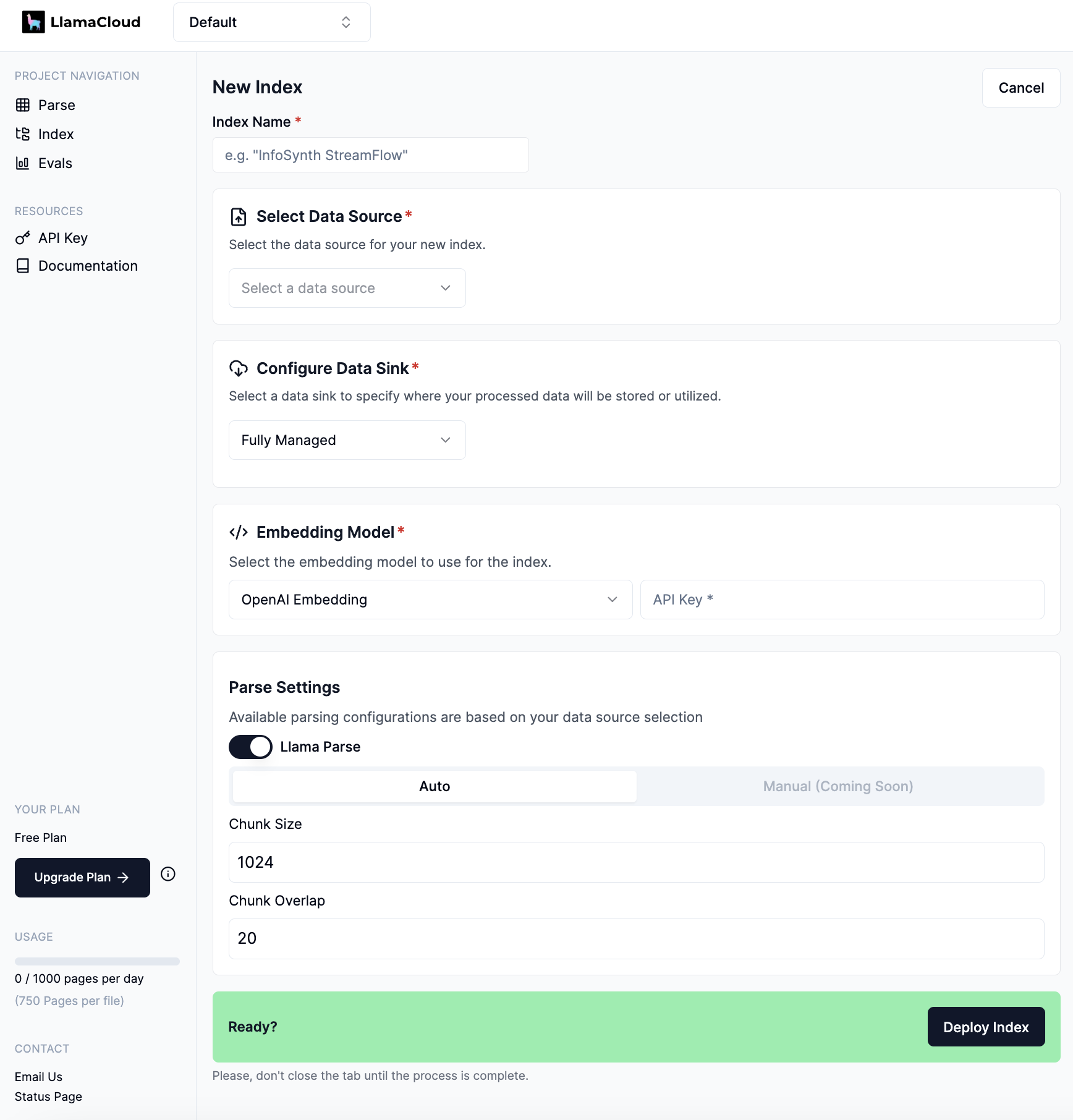

The first step of creating a new index in Llamaindex.

Llamaindex’s user interface lets you upload documents and parse them within the platform. From that point on, most of the process is handled in external apps, integrated development environments (IDEs), or command lines as you build the complete solution.

Stack AI is a drag-and-drop visual AI workflow builder

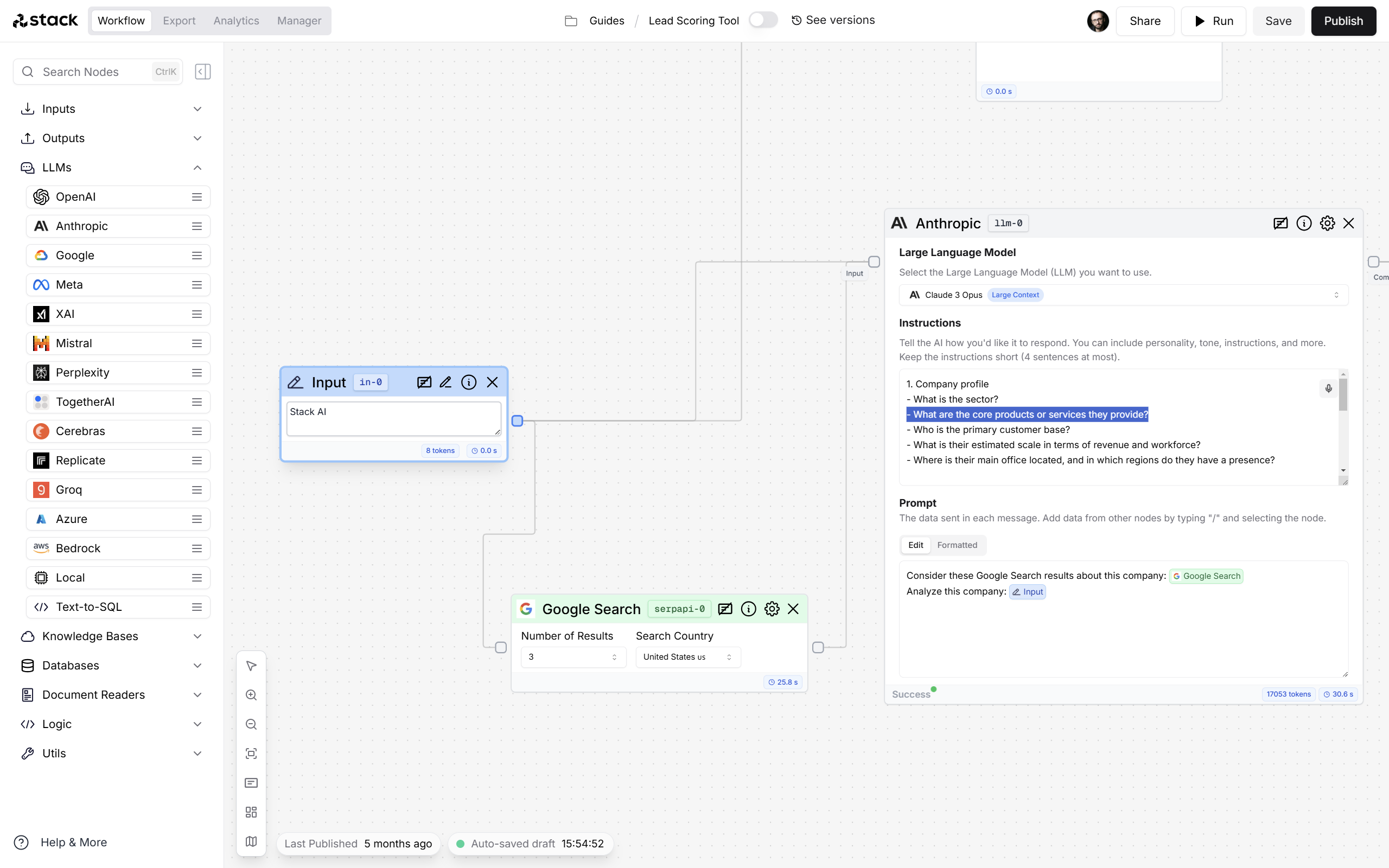

In Stack AI, you can configure how each node behaves by interacting with it on the canvas.

Stack AI’s building process happens on a canvas. Drag data sources, LLMs, and outputs from the left-side menu onto it. Once you link them by connecting the node handles, data flows from one node to the next, and you can run the project and watch a visual sequence of how it works. The process is visual and intuitive, helping users grasp complex actions in a simple way.
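Conceptually, a canvas like this describes a directed acyclic graph: each node runs once its inputs are ready and passes its output along its connections. The sketch below models that idea with Python’s standard `graphlib.TopologicalSorter`. The node names and functions are hypothetical, and this is an illustration of the concept, not how Stack AI’s engine is implemented.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical node type: (function to run, names of upstream nodes).
Node = tuple[Callable[..., str], list[str]]


def run_workflow(nodes: dict[str, Node], user_input: str) -> dict[str, str]:
    # Dependency graph: each node maps to the set of nodes it depends on.
    graph = {name: set(deps) for name, (_, deps) in nodes.items()}
    outputs: dict[str, str] = {}
    # static_order() yields every node after all of its dependencies.
    for name in TopologicalSorter(graph).static_order():
        fn, deps = nodes[name]
        # Nodes without upstream connections receive the user's input.
        args = [outputs[d] for d in deps] if deps else [user_input]
        outputs[name] = fn(*args)
    return outputs


workflow: dict[str, Node] = {
    "input": (lambda text: text.strip(), []),
    "knowledge_base": (
        lambda q: "Refunds take 14 days." if "refund" in q.lower() else "",
        ["input"],
    ),
    "llm": (lambda q, ctx: f"Q: {q} | Context: {ctx}", ["input", "knowledge_base"]),
    "output": (lambda reply: reply.upper(), ["llm"]),
}

result = run_workflow(workflow, "  How long do refunds take?  ")
print(result["output"])
```

Keeping every intermediate result in `outputs` is what makes a step-by-step visual trace of a run possible: each node’s output can be inspected after the fact.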

Pricing

Llamaindex is pay-as-you-go

Llamaindex has pay-as-you-go pricing. Every time you index or extract data, your payment method is billed based on the computational intensity of the action. Each time your AI application runs and Llamaindex does work as part of that run, you’re billed for that work.

Costs for LLM tokens and for the infrastructure required to run your AI application are not included.

Stack AI has 3 paid plans with fixed pricing

In addition to a free plan for building your first projects, Stack AI offers 3 paid plans to fit your needs as you scale. Pricing varies based on the number of tool runs, the number of projects, and the number of active builders in your workspace.

Stack AI includes tokens for all leading LLMs at no extra cost, and doesn’t charge additional fees for infrastructure.

Llamaindex vs Stack AI: which one is the best?

| | Llamaindex | Stack AI |
|---|---|---|
| What is it? | Developer-grade data indexing tool | Enterprise-grade AI workflow automation platform |
| User experience | Simple form-based interface | Visual drag-and-drop canvas, full functionality available without having to code |
| AI model availability | Only embedding models, required to index data in a vector store. No LLMs. | All leading models, API keys not required |
| Integrations | API | Connects with popular data sources across ecosystems: Microsoft, Google, Amazon, Salesforce, HubSpot, Zapier, among others. API available. Easy integration process. |
| Data privacy and security | HIPAA | Enterprise-grade security, including SOC 2, GDPR, and HIPAA compliance. Data processing addendums (DPAs) with OpenAI and Anthropic. |
| Pricing | Free plan available with 1,000 credits. Paid plans on a pay-as-you-go basis after exceeding free plan usage. | Free plan available. Starts at $200 per month for 2,000 project runs. Dedicated support included in Enterprise plan at no extra cost. |

Llamaindex is a specialized developer tool focused on preparing and indexing data from various knowledge bases. It streamlines data ingestion, indexing, and querying for large language models (LLMs). However, it can’t build or deploy AI-powered applications on its own. Using Llamaindex requires deep technical expertise, especially for handling data and querying it from an external platform.

Stack AI offers an end-to-end platform where your team can create automation workflows with leading AI models. It features a user-friendly, no-code, drag-and-drop interface, reducing the level of technical skill required to begin. It handles all of Llamaindex’s functionality natively, without extra configuration. It provides pre-built interfaces, performance monitoring, and robust security measures such as guardrails and PII protection. Create a free account and start automating today.